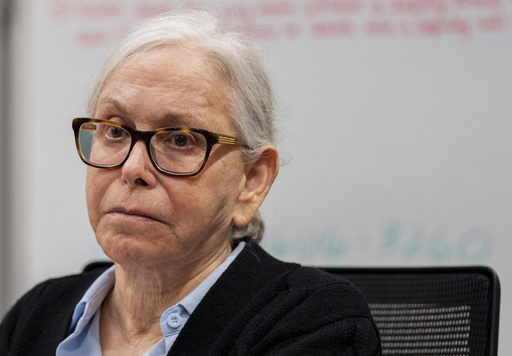

South Florida matriarch sentenced to life in prison for hired killing of her ex-son-in-law

The matriarch of a wealthy South Florida family who was convicted in the hired killing of her former son-in-law has been sentenced to life in prison. Last month, a jury convicted Donna Adelson for her role in the 2014 murder-for-hire of Daniel Markel. A prominent Florida State University law professor, Markel was locked in a bitter custody battle with his ex-wife, Adelson’s daughter. In an emotional statement to the court on Monday, Adelson maintained her innocence. She has pledged to appeal.